This post describes how to prepare the shared storage for Oracle RAC.

1. Shared Disk Layout

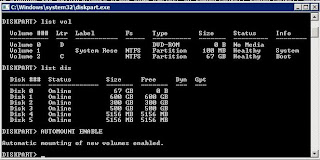

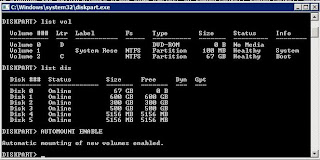

2. Enable Automounting of disks on Windows

3. Clean the Shared Disks

4. Create Logical partitions inside Extended partitions

5. Drive Letters

6. View Disks

7. Marking Disk Partitions for use by ASM

8. Verify Clusterware Installation Readiness

Shared Disk Layout

(assumes you are doing these task on node1)

It is assumed that the two nodes have local disk primarily for the operating system and the local Oracle Homes. Labelled C: The Oracle Grid Infrastructure software also resides on the local disks on each node. The 2 nodes must also share some central disks. This disk must not have cache enabled at the node level. i.e. if the HBA (Host Bus Adapter) drivers support caching of reads/writes it should be disabled. If the SAN supports caching that is visible to all nodes then this can be enabled.

Grid Infrastructure Shared Storage

For those who wish to utilize Oracle supplied redundancy for the OCR and Voting disks you could create a

For demonstration purposes within this post, I will be using the 2 diskgroups.

ASM Shared Storage

It is recommended that ALL ASM disks within a disk group are of the same size and carry the same

performance characteristics. Whenever possible Oracle also recommends sticking to the SAME (Stripe And

Mirror Everything) methodology by using RAID 1+0. If SAN level redundancy is available, external

redundancy should be used for database storage on ASM.

We will use the diskpart command line tool to manage these LUNs. You must create logical

drives inside of extended partitions for the disks to be used by Oracle Grid Infrastructure and Oracle ASM.

There must be no drive letters assigned to any of the Disks1 – Disk10 on any node. For MIcrosoft Windows 2003 it is possible to use diskmgmt.msc instead of diskpart (as used in the following sections) to create these partitions. For Microsoft Windows 2008, diskmgmt.msc cannot be used instead of diskpart to create these partitions.

Enable Automount

You must enable automounting of disks for them to be visible to Oracle Grid Infrastructure. On each node log in as someone with Administrator privileges then Click START->RUN and type diskpart

Clean the Shared Disks

You may want to clean your shared disks before starting the install. Cleaning will remove data from any

previous failed install. But see a later Appendix for coping with failed installs. On Node1 from within diskpart

you should clean each of the disks. WARNING this will destroy all of the data on the disk. Do not select

the disk containing the operating system or you will have to reinstall the OS Cleaning the disk ‘scrubs’ every block on the disk. This may take some time to complete.

DISKPART> select disk 2

Disk 2 is now the selected disk.

DISKPART> clean all

Create Logical partitions inside Extended partitions

Assuming the disks you are going to use are completely empty you must create an extended partition and then inside that partition a logical partition. In the following example, for Oracle Grid Infrastructure, I have

dedicated LUNS for each device.

List Drive Letters

Diskpart should not add drive letters to the partitions on the local node. The partitions on the other node may

have drive letters assigned. You must remove them. On earlier versions of Windows 2003 a reboot of the

‘other’ node will be required for the new partitions to become visible. Windows 2003 SP2 and Windows 2008 do not suffer from this issue.

Remove Drive Letters

You need to remove the drive letters other than c: (only related to your shared storage)

DISKPART> select volume 2

Volume 2 is the selected volume.

DISKPART> remov

DiskPart successfully removed the drive letter or mount point.

List volumes on Second node

You should check that none of the RAW partitions have drive letters assigned. if you don't see the impact what you did on node 1 , rescan on diskpart.

Marking Disk Partitions for use by ASM

The only partitions that the Oracle Universal Installer acknowledges on Windows systems are logical drives

Review the summary screen and click Next.

On the final screen, click Finish to update the ASM Disk Labels.

Repeat these steps for all ASM disks that will differ in their label prefix.

Verify Grid Infrastructure Installation Readiness

Though CLUVFY is packaged with the Grid Infrastructure installation media, it is recommended to download and run the latest version of CLUVFY. The latest version of the CLUVFY utility can be downloaded from: http://otn.oracle.com/rac

Once the latest version of the CLUVFY has been downloaded and installed, execute it as follows to perform

the Grid Infrastructure pre-installation verification:

Login to the server in which the installation will be performed as the Local Administrator.

Open a command prompt and run CLUVFY as follows to perform the Oracle Clusterware pre-installation verification:

Performing post-checks for hardware and operating system setup

Checking node reachability...

Check: Node reachability from node "P-HQ-CL-OR-11"

Destination Node Reachable?

------------------------------------ ------------------------

P-HQ-CL-OR-11 yes

P-HQ-CL-OR-12 yes

Result: Node reachability check passed from node "P-HQ-CL-OR-11"

Checking user equivalence...

Check: User equivalence for user "Administrator"

Node Name Comment

------------------------------------ ------------------------

P-HQ-CL-OR-12 passed

P-HQ-CL-OR-11 passed

Result: User equivalence check passed for user "Administrator"

Checking node connectivity...

Interface information for node "P-HQ-CL-OR-12"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

public 192.168.26.12 192.168.26.0 On-link UNKNOWN 00:1A:A0:34:91:0A 1500

public 192.168.26.14 192.168.26.0 On-link UNKNOWN 00:1A:A0:34:91:0A 1500

private 10.11.11.2 10.11.11.0 On-link UNKNOWN 00:1A:A0:34:91:0C 1500

Interface information for node "P-HQ-CL-OR-11"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

public 192.168.26.11 192.168.26.0 On-link UNKNOWN 00:1D:09:0C:B4:CD 1500

public 192.168.26.13 192.168.26.0 On-link UNKNOWN 00:1D:09:0C:B4:CD 1500

private 10.11.11.1 10.11.11.0 On-link UNKNOWN 00:1D:09:0C:B4:CF 1500

Check: Node connectivity of subnet "192.168.26.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-12[192.168.26.12] P-HQ-CL-OR-12[192.168.26.14] yes

P-HQ-CL-OR-12[192.168.26.12] P-HQ-CL-OR-11[192.168.26.11] yes

P-HQ-CL-OR-12[192.168.26.12] P-HQ-CL-OR-11[192.168.26.13] yes

P-HQ-CL-OR-12[192.168.26.14] P-HQ-CL-OR-11[192.168.26.11] yes

P-HQ-CL-OR-12[192.168.26.14] P-HQ-CL-OR-11[192.168.26.13] yes

P-HQ-CL-OR-11[192.168.26.11] P-HQ-CL-OR-11[192.168.26.13] yes

Result: Node connectivity passed for subnet "192.168.26.0" with node(s) P-HQ-CL-OR-12,P-HQ-CL-OR-11

Check: TCP connectivity of subnet "192.168.26.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-11:192.168.26.11 P-HQ-CL-OR-12:192.168.26.12 passed

P-HQ-CL-OR-11:192.168.26.11 P-HQ-CL-OR-12:192.168.26.14 passed

P-HQ-CL-OR-11:192.168.26.11 P-HQ-CL-OR-11:192.168.26.13 passed

Result: TCP connectivity check passed for subnet "192.168.26.0"

Check: Node connectivity of subnet "10.11.11.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-12[10.11.11.2] P-HQ-CL-OR-11[10.11.11.1] yes

Result: Node connectivity passed for subnet "10.11.11.0" with node(s) P-HQ-CL-OR-12,P-HQ-CL-OR-11

Check: TCP connectivity of subnet "10.11.11.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-11:10.11.11.1 P-HQ-CL-OR-12:10.11.11.2 passed

Result: TCP connectivity check passed for subnet "10.11.11.0"

Interfaces found on subnet "192.168.26.0" that are likely candidates for a private interconnect are:

P-HQ-CL-OR-12 public:192.168.26.12 public:192.168.26.14

P-HQ-CL-OR-11 public:192.168.26.11 public:192.168.26.13

Interfaces found on subnet "10.11.11.0" that are likely candidates for a private interconnect are:

P-HQ-CL-OR-12 private:10.11.11.2

P-HQ-CL-OR-11 private:10.11.11.1

WARNING:

Could not find a suitable set of interfaces for VIPs

Result: Node connectivity check passed

Check: Time zone consistency

Result: Time zone consistency check passed

Checking shared storage accessibility...

Disk Partition Sharing Nodes (2 in count)

------------------------------------ ------------------------

\Device\Harddisk2\Partition1 P-HQ-CL-OR-12 P-HQ-CL-OR-11

Disk Partition Sharing Nodes (2 in count)

------------------------------------ ------------------------

\Device\Harddisk3\Partition1 P-HQ-CL-OR-12 P-HQ-CL-OR-11

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

\Device\Harddisk4 P-HQ-CL-OR-12 P-HQ-CL-OR-11

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

\Device\Harddisk5 P-HQ-CL-OR-12 P-HQ-CL-OR-11

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

\Device\Harddisk6 P-HQ-CL-OR-12 P-HQ-CL-OR-11

Shared storage check was successful on nodes "P-HQ-CL-OR-12,P-HQ-CL-OR-11"

Post-check for hardware and operating system setup was successful.

cluvfy stage -pre crsinst -n P-HQ-CL-OR-11,P-HQ-CL-OR-12 -verbose > c:\temp\precrsinst8.txt

Performing pre-checks for cluster services setup

Checking node reachability...

Check: Node reachability from node "P-HQ-CL-OR-11"

Destination Node Reachable?

------------------------------------ ------------------------

P-HQ-CL-OR-11 yes

P-HQ-CL-OR-12 yes

Result: Node reachability check passed from node "P-HQ-CL-OR-11"

Checking user equivalence...

Check: User equivalence for user "Inam"

Node Name Comment

------------------------------------ ------------------------

P-HQ-CL-OR-12 passed

P-HQ-CL-OR-11 passed

Result: User equivalence check passed for user "Inam"

Checking node connectivity...

Interface information for node "P-HQ-CL-OR-12"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

public 192.168.26.12 192.168.26.0 On-link UNKNOWN 00:1A:A0:34:91:0A 1500

private 10.11.11.2 10.11.11.0 On-link UNKNOWN 00:1A:A0:34:91:0C 1500

Interface information for node "P-HQ-CL-OR-11"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

public 192.168.26.11 192.168.26.0 On-link UNKNOWN 00:1D:09:0C:B4:CD 1500

private 10.11.11.1 10.11.11.0 On-link UNKNOWN 00:1D:09:0C:B4:CF 1500

Check: Node connectivity of subnet "192.168.26.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-12[192.168.26.12] P-HQ-CL-OR-11[192.168.26.11] yes

Result: Node connectivity passed for subnet "192.168.26.0" with node(s) P-HQ-CL-OR-12,P-HQ-CL-OR-11

Check: TCP connectivity of subnet "192.168.26.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-11:192.168.26.11 P-HQ-CL-OR-12:192.168.26.12 passed

Result: TCP connectivity check passed for subnet "192.168.26.0"

Check: Node connectivity of subnet "10.11.11.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-12[10.11.11.2] P-HQ-CL-OR-11[10.11.11.1] yes

Result: Node connectivity passed for subnet "10.11.11.0" with node(s) P-HQ-CL-OR-12,P-HQ-CL-OR-11

Check: TCP connectivity of subnet "10.11.11.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-11:10.11.11.1 P-HQ-CL-OR-12:10.11.11.2 passed

Result: TCP connectivity check passed for subnet "10.11.11.0"

Interfaces found on subnet "192.168.26.0" that are likely candidates for a private interconnect are:

P-HQ-CL-OR-12 public:192.168.26.12

P-HQ-CL-OR-11 public:192.168.26.11

Interfaces found on subnet "10.11.11.0" that are likely candidates for a private interconnect are:

P-HQ-CL-OR-12 private:10.11.11.2

P-HQ-CL-OR-11 private:10.11.11.1

WARNING:

Could not find a suitable set of interfaces for VIPs

Result: Node connectivity check passed

Check: Total memory

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

P-HQ-CL-OR-12 15.9951GB (1.6772032E7KB) 922MB (944128.0KB) passed

P-HQ-CL-OR-11 15.9951GB (1.6772032E7KB) 922MB (944128.0KB) passed

Result: Total memory check passed

Check: Available memory

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

P-HQ-CL-OR-12 14.6672GB (1.5379624E7KB) 50MB (51200.0KB) passed

P-HQ-CL-OR-11 14.5223GB (1.5227736E7KB) 50MB (51200.0KB) passed

Result: Available memory check passed

Check: Swap space

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

P-HQ-CL-OR-12 31.9883GB (3.3542216E7KB) 15.9951GB (1.6772032E7KB) passed

P-HQ-CL-OR-11 31.9883GB (3.3542216E7KB) 15.9951GB (1.6772032E7KB) passed

Result: Swap space check passed

Check: Free disk space for "P-HQ-CL-OR-12:C:\Windows\temp"

Path Node Name Mount point Available Required Comment

---------------- ------------ ------------ ------------ ------------ ------------

C:\Windows\temp P-HQ-CL-OR-12 C 36.2099GB 1GB passed

Result: Free disk space check passed for "P-HQ-CL-OR-12:C:\Windows\temp"

Check: Free disk space for "P-HQ-CL-OR-11:C:\Windows\temp"

Path Node Name Mount point Available Required Comment

---------------- ------------ ------------ ------------ ------------ ------------

C:\Windows\temp P-HQ-CL-OR-11 C 42.631GB 1GB passed

Result: Free disk space check passed for "P-HQ-CL-OR-11:C:\Windows\temp"

Check: System architecture

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

P-HQ-CL-OR-12 64-bit 64-bit passed

P-HQ-CL-OR-11 64-bit 64-bit passed

Result: System architecture check passed

Checking length of value of environment variable "PATH"

Check: Length of value of environment variable "PATH"

Node Name Set? Maximum Length Actual Length Comment

---------------- ------------ ------------ ------------ ----------------

P-HQ-CL-OR-12 yes 1023 336 passed

P-HQ-CL-OR-11 yes 1023 345 passed

Result: Check for length of value of environment variable "PATH" passed.

Checking for Media Sensing status of TCP/IP

Node Name Enabled? Comment

------------ ------------------------ ------------------------

P-HQ-CL-OR-12 no passed

P-HQ-CL-OR-11 no passed

Result: Media Sensing status of TCP/IP check passed

Starting Clock synchronization checks using Network Time Protocol(NTP)...

Checking daemon liveness...

Check: Liveness for "W32Time"

Node Name Running?

------------------------------------ ------------------------

P-HQ-CL-OR-12 yes

P-HQ-CL-OR-11 yes

Result: Liveness check passed for "W32Time"

Check for NTP daemon or service alive passed on all nodes

Result: Clock synchronization check using Network Time Protocol(NTP) passed

Checking if current user is a domain user...

Check: If user "Inam" is a domain user

Result: User "Inam" is a part of the domain "DOMAIN_NAME"

Check: Time zone consistency

Result: Time zone consistency check passed

Checking for status of Automount feature

Node Name Enabled? Comment

------------ ------------------------ ------------------------

P-HQ-CL-OR-12 yes passed

P-HQ-CL-OR-11 yes passed

Result: Check for status of Automount feature passed

Pre-check for cluster services setup was successful.

Note:If any errors are encountered, these issues should be investigated and resolved before proceeding with the installation.

1. Shared Disk Layout

2. Enable Automounting of disks on Windows

3. Clean the Shared Disks

4. Create Logical partitions inside Extended partitions

5. Drive Letters

6. View Disks

7. Marking Disk Partitions for use by ASM

8. Verify Clusterware Installation Readiness

Shared Disk Layout

(This assumes you are performing these tasks on node1.)

It is assumed that each of the two nodes has a local disk, labelled C:, used primarily for the operating system and the local Oracle homes. The Oracle Grid Infrastructure software also resides on the local disk of each node. The two nodes must also share some central disks. These disks must not have caching enabled at the node level; that is, if the HBA (Host Bus Adapter) drivers support caching of reads and writes, it should be disabled. If the SAN supports caching that is visible to all nodes, it can be enabled.

Grid Infrastructure Shared Storage

With Oracle 11gR2 it is considered a best practice to store the OCR and Voting Disk within ASM and to maintain the ASM best practice of having no more than 2 diskgroups (Flash Recovery Area and Database Area). This means that the OCR and Voting Disk will be stored along with the database-related files. If you are using external redundancy for your diskgroups, this means you will have 1 Voting Disk and 1 OCR.

For those who wish to use Oracle-supplied redundancy for the OCR and Voting Disks, you could create a separate (3rd) ASM diskgroup having a minimum of 2 fail groups (total of 3 disks). This configuration will provide 3 multiplexed copies of the Voting Disk and a single OCR which takes on the redundancy of that diskgroup (mirrored within ASM). The minimum size of the 3 disks that make up this diskgroup is 1GB. This diskgroup will also be used to store the ASM SPFILE.

For demonstration purposes within this post, I will be using the 2 diskgroups.

ASM Shared Storage

It is recommended that all ASM disks within a disk group are of the same size and carry the same performance characteristics. Whenever possible, Oracle also recommends sticking to the SAME (Stripe And Mirror Everything) methodology by using RAID 1+0. If SAN-level redundancy is available, external redundancy should be used for database storage on ASM.

We will use the diskpart command-line tool to manage these LUNs. You must create logical drives inside extended partitions for the disks to be used by Oracle Grid Infrastructure and Oracle ASM. There must be no drive letters assigned to any of Disk1 to Disk10 on any node. For Microsoft Windows 2003 it is possible to use diskmgmt.msc instead of diskpart (as used in the following sections) to create these partitions. For Microsoft Windows 2008, diskmgmt.msc cannot be used instead of diskpart to create these partitions.

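Enable Automount

You must enable automounting of disks for them to be visible to Oracle Grid Infrastructure. On each node, log in as a user with Administrator privileges, then click Start -> Run and type diskpart.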
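Automounting is controlled from the DISKPART prompt on each node. A minimal sketch in diskpart script form (lines starting with rem are comments; the commands can also be typed one at a time at the DISKPART> prompt):

```text
rem Enable automatic mounting of new volumes on this node
automount enable
rem With no argument, automount only reports the current setting
automount
```

Run this on every node in the cluster, since the setting is local to each Windows installation.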

Clean the Shared Disks

You may want to clean your shared disks before starting the install. Cleaning removes data left over from any previous failed install (but see a later appendix for coping with failed installs). On node1, from within diskpart, clean each of the disks.

WARNING: This will destroy all of the data on the disk. Do not select the disk containing the operating system, or you will have to reinstall the OS.

Cleaning the disk 'scrubs' every block on the disk, so it may take some time to complete.

DISKPART> select disk 2

Disk 2 is now the selected disk.

DISKPART> clean all
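Because clean all is destructive, it can help to confirm which disk is selected before running it. The following is a cautious sketch in diskpart script form; disk 2 is taken from the session above and may be a different number on your system:

```text
rem List all disks so the shared LUNs can be told apart from the OS disk
list disk
rem Select one shared disk (disk 2 here is only an example)
select disk 2
rem Show the selected disk's details and volumes to confirm it is not the OS disk
detail disk
rem Only after confirming: overwrite every sector of the selected disk
clean all
```

Repeat the select, detail and clean steps for each shared disk in turn.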

Create Logical partitions inside Extended partitions

Assuming the disks you are going to use are completely empty, you must create an extended partition and then, inside that partition, a logical partition. In the following example, for Oracle Grid Infrastructure, I have dedicated LUNs for each device.
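A minimal sketch of the partitioning sequence in diskpart script form, assuming the shared LUN appears as disk 2 (a hypothetical number) and that the whole disk is given to a single logical drive. Extended partitions exist only on MBR-style disks, so this assumes the disk is not GPT:

```text
rem Select one of the shared disks (the number is an assumption)
select disk 2
rem Create an extended partition spanning the whole disk
create partition extended
rem Create a logical drive inside the extended partition, using all of its space
create partition logical
rem Confirm that both partitions now exist on the selected disk
list partition
```

The same four steps would be repeated for each shared disk, changing only the disk number.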

List Drive Letters

Diskpart should not add drive letters to the partitions on the local node. The partitions on the other node may have drive letters assigned; you must remove them. On earlier versions of Windows 2003, a reboot of the 'other' node will be required for the new partitions to become visible. Windows 2003 SP2 and Windows 2008 do not suffer from this issue.
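To see which volumes currently carry a drive letter, diskpart's volume listing can be used on each node. A one-command sketch:

```text
rem Show every volume with its number, drive letter (Ltr column) and file system
list volume
```

Shared partitions intended for Oracle should show an empty Ltr column; note the volume number of any that do not.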

Remove Drive Letters

You need to remove any drive letters other than C: that are assigned to your shared storage.

DISKPART> select volume 2

Volume 2 is the selected volume.

DISKPART> remov

DiskPart successfully removed the drive letter or mount point.
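In script form, the same removal might look like the sketch below. The volume number and the drive letter E are assumptions for illustration; when no letter is given, diskpart's remove command acts on the first drive letter or mount point it finds on the selected volume.

```text
rem Select the shared volume that was assigned a letter (number is an example)
select volume 2
rem Remove its drive letter (E is a hypothetical letter)
remove letter=E
rem Verify that the volume no longer shows a letter
list volume
```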

List volumes on Second node

On the second node, check that none of the RAW partitions have drive letters assigned. If the changes you made on node 1 are not visible, run rescan in diskpart.
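On the second node, the check can be sketched as the following diskpart commands:

```text
rem Re-read the disk configuration so changes made on node 1 become visible
rescan
rem Confirm that the shared RAW volumes show no drive letter
list volume
```

If a shared volume still shows a letter here, remove it on this node as described in the previous section.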

Marking Disk Partitions for use by ASM

The only partitions that the Oracle Universal Installer acknowledges on Windows systems are logical drives that are created on top of extended partitions and that have been stamped as candidate ASM disks. Therefore, before running the OUI, the disks that are to be used by Oracle RAC must be stamped using ASM Tool. ASM Tool is available in two flavors: command line (asmtool) and graphical (asmtoolg). Both utilities can be found under the asmtool directory within the Grid Infrastructure installation media. For this installation, asmtoolg will be used to stamp the ASM disks.

- Within Windows Explorer, navigate to the asmtool directory within the Grid Infrastructure installation media and double-click the asmtoolg.exe executable.

- Within the ASM Tool GUI, select Add or Change Label and click Next.

- Perform the stamping while running as administrator, and choose your own prefixes while stamping.

- Review the summary screen and click Next.

- On the final screen, click Finish to update the ASM disk labels.

- Repeat these steps for all ASM disks that will differ in their label prefix.
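For those who prefer the command-line flavor, a hedged sketch using asmtool from an administrator command prompt in the asmtool directory follows. The device path matches the form shown in the CLUVFY output later in this post, while the label ORCLDISKDATA0 is purely a hypothetical example; check the tool's own help output for the options available in your release.

```bat
rem List the partitions ASM Tool can see, along with any existing stamps
asmtool -list
rem Stamp one partition with a label (device and label are example values)
asmtool -add \Device\Harddisk2\Partition1 ORCLDISKDATA0
rem List again to confirm the new stamp
asmtool -list
```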

Verify Grid Infrastructure Installation Readiness

Prior to installing Grid Infrastructure, it is highly recommended to run the cluster verification utility (CLUVFY) to verify that the cluster nodes have been properly configured for a successful Oracle Grid Infrastructure installation. CLUVFY can be run at various levels; at this stage it should be run in the CRS pre-installation mode. Later, CLUVFY will be run in pre dbinst mode to validate the readiness for the RDBMS software installation.

Though CLUVFY is packaged with the Grid Infrastructure installation media, it is recommended to download and run the latest version of CLUVFY. The latest version of the CLUVFY utility can be downloaded from: http://otn.oracle.com/rac

Once the latest version of CLUVFY has been downloaded and installed, execute it as follows to perform the Grid Infrastructure pre-installation verification:

Log in to the server on which the installation will be performed as the local Administrator.

Open a command prompt and run CLUVFY as follows to perform the Oracle Clusterware pre-installation verification:

cluvfy stage -post hwos -n P-HQ-CL-OR-11,P-HQ-CL-OR-12 -verbose > c:\temp\networkStorageVerification.txt

Performing post-checks for hardware and operating system setup

Checking node reachability...

Check: Node reachability from node "P-HQ-CL-OR-11"

Destination Node Reachable?

------------------------------------ ------------------------

P-HQ-CL-OR-11 yes

P-HQ-CL-OR-12 yes

Result: Node reachability check passed from node "P-HQ-CL-OR-11"

Checking user equivalence...

Check: User equivalence for user "Administrator"

Node Name Comment

------------------------------------ ------------------------

P-HQ-CL-OR-12 passed

P-HQ-CL-OR-11 passed

Result: User equivalence check passed for user "Administrator"

Checking node connectivity...

Interface information for node "P-HQ-CL-OR-12"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

public 192.168.26.12 192.168.26.0 On-link UNKNOWN 00:1A:A0:34:91:0A 1500

public 192.168.26.14 192.168.26.0 On-link UNKNOWN 00:1A:A0:34:91:0A 1500

private 10.11.11.2 10.11.11.0 On-link UNKNOWN 00:1A:A0:34:91:0C 1500

Interface information for node "P-HQ-CL-OR-11"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

public 192.168.26.11 192.168.26.0 On-link UNKNOWN 00:1D:09:0C:B4:CD 1500

public 192.168.26.13 192.168.26.0 On-link UNKNOWN 00:1D:09:0C:B4:CD 1500

private 10.11.11.1 10.11.11.0 On-link UNKNOWN 00:1D:09:0C:B4:CF 1500

Check: Node connectivity of subnet "192.168.26.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-12[192.168.26.12] P-HQ-CL-OR-12[192.168.26.14] yes

P-HQ-CL-OR-12[192.168.26.12] P-HQ-CL-OR-11[192.168.26.11] yes

P-HQ-CL-OR-12[192.168.26.12] P-HQ-CL-OR-11[192.168.26.13] yes

P-HQ-CL-OR-12[192.168.26.14] P-HQ-CL-OR-11[192.168.26.11] yes

P-HQ-CL-OR-12[192.168.26.14] P-HQ-CL-OR-11[192.168.26.13] yes

P-HQ-CL-OR-11[192.168.26.11] P-HQ-CL-OR-11[192.168.26.13] yes

Result: Node connectivity passed for subnet "192.168.26.0" with node(s) P-HQ-CL-OR-12,P-HQ-CL-OR-11

Check: TCP connectivity of subnet "192.168.26.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-11:192.168.26.11 P-HQ-CL-OR-12:192.168.26.12 passed

P-HQ-CL-OR-11:192.168.26.11 P-HQ-CL-OR-12:192.168.26.14 passed

P-HQ-CL-OR-11:192.168.26.11 P-HQ-CL-OR-11:192.168.26.13 passed

Result: TCP connectivity check passed for subnet "192.168.26.0"

Check: Node connectivity of subnet "10.11.11.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-12[10.11.11.2] P-HQ-CL-OR-11[10.11.11.1] yes

Result: Node connectivity passed for subnet "10.11.11.0" with node(s) P-HQ-CL-OR-12,P-HQ-CL-OR-11

Check: TCP connectivity of subnet "10.11.11.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-11:10.11.11.1 P-HQ-CL-OR-12:10.11.11.2 passed

Result: TCP connectivity check passed for subnet "10.11.11.0"

Interfaces found on subnet "192.168.26.0" that are likely candidates for a private interconnect are:

P-HQ-CL-OR-12 public:192.168.26.12 public:192.168.26.14

P-HQ-CL-OR-11 public:192.168.26.11 public:192.168.26.13

Interfaces found on subnet "10.11.11.0" that are likely candidates for a private interconnect are:

P-HQ-CL-OR-12 private:10.11.11.2

P-HQ-CL-OR-11 private:10.11.11.1

WARNING:

Could not find a suitable set of interfaces for VIPs

Result: Node connectivity check passed

Check: Time zone consistency

Result: Time zone consistency check passed

Checking shared storage accessibility...

Disk Partition Sharing Nodes (2 in count)

------------------------------------ ------------------------

\Device\Harddisk2\Partition1 P-HQ-CL-OR-12 P-HQ-CL-OR-11

Disk Partition Sharing Nodes (2 in count)

------------------------------------ ------------------------

\Device\Harddisk3\Partition1 P-HQ-CL-OR-12 P-HQ-CL-OR-11

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

\Device\Harddisk4 P-HQ-CL-OR-12 P-HQ-CL-OR-11

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

\Device\Harddisk5 P-HQ-CL-OR-12 P-HQ-CL-OR-11

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

\Device\Harddisk6 P-HQ-CL-OR-12 P-HQ-CL-OR-11

Shared storage check was successful on nodes "P-HQ-CL-OR-12,P-HQ-CL-OR-11"

Post-check for hardware and operating system setup was successful.

cluvfy stage -pre crsinst -n P-HQ-CL-OR-11,P-HQ-CL-OR-12 -verbose > c:\temp\precrsinst8.txt

Performing pre-checks for cluster services setup

Checking node reachability...

Check: Node reachability from node "P-HQ-CL-OR-11"

Destination Node Reachable?

------------------------------------ ------------------------

P-HQ-CL-OR-11 yes

P-HQ-CL-OR-12 yes

Result: Node reachability check passed from node "P-HQ-CL-OR-11"

Checking user equivalence...

Check: User equivalence for user "Inam"

Node Name Comment

------------------------------------ ------------------------

P-HQ-CL-OR-12 passed

P-HQ-CL-OR-11 passed

Result: User equivalence check passed for user "Inam"

Checking node connectivity...

Interface information for node "P-HQ-CL-OR-12"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

public 192.168.26.12 192.168.26.0 On-link UNKNOWN 00:1A:A0:34:91:0A 1500

private 10.11.11.2 10.11.11.0 On-link UNKNOWN 00:1A:A0:34:91:0C 1500

Interface information for node "P-HQ-CL-OR-11"

Name IP Address Subnet Gateway Def. Gateway HW Address MTU

------ --------------- --------------- --------------- --------------- ----------------- ------

public 192.168.26.11 192.168.26.0 On-link UNKNOWN 00:1D:09:0C:B4:CD 1500

private 10.11.11.1 10.11.11.0 On-link UNKNOWN 00:1D:09:0C:B4:CF 1500

Check: Node connectivity of subnet "192.168.26.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-12[192.168.26.12] P-HQ-CL-OR-11[192.168.26.11] yes

Result: Node connectivity passed for subnet "192.168.26.0" with node(s) P-HQ-CL-OR-12,P-HQ-CL-OR-11

Check: TCP connectivity of subnet "192.168.26.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-11:192.168.26.11 P-HQ-CL-OR-12:192.168.26.12 passed

Result: TCP connectivity check passed for subnet "192.168.26.0"

Check: Node connectivity of subnet "10.11.11.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-12[10.11.11.2] P-HQ-CL-OR-11[10.11.11.1] yes

Result: Node connectivity passed for subnet "10.11.11.0" with node(s) P-HQ-CL-OR-12,P-HQ-CL-OR-11

Check: TCP connectivity of subnet "10.11.11.0"

Source Destination Connected?

------------------------------ ------------------------------ ----------------

P-HQ-CL-OR-11:10.11.11.1 P-HQ-CL-OR-12:10.11.11.2 passed

Result: TCP connectivity check passed for subnet "10.11.11.0"

Interfaces found on subnet "192.168.26.0" that are likely candidates for a private interconnect are:

P-HQ-CL-OR-12 public:192.168.26.12

P-HQ-CL-OR-11 public:192.168.26.11

Interfaces found on subnet "10.11.11.0" that are likely candidates for a private interconnect are:

P-HQ-CL-OR-12 private:10.11.11.2

P-HQ-CL-OR-11 private:10.11.11.1

WARNING:

Could not find a suitable set of interfaces for VIPs

Result: Node connectivity check passed

Check: Total memory

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

P-HQ-CL-OR-12 15.9951GB (1.6772032E7KB) 922MB (944128.0KB) passed

P-HQ-CL-OR-11 15.9951GB (1.6772032E7KB) 922MB (944128.0KB) passed

Result: Total memory check passed

Check: Available memory

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

P-HQ-CL-OR-12 14.6672GB (1.5379624E7KB) 50MB (51200.0KB) passed

P-HQ-CL-OR-11 14.5223GB (1.5227736E7KB) 50MB (51200.0KB) passed

Result: Available memory check passed

Check: Swap space

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

P-HQ-CL-OR-12 31.9883GB (3.3542216E7KB) 15.9951GB (1.6772032E7KB) passed

P-HQ-CL-OR-11 31.9883GB (3.3542216E7KB) 15.9951GB (1.6772032E7KB) passed

Result: Swap space check passed

Check: Free disk space for "P-HQ-CL-OR-12:C:\Windows\temp"

Path Node Name Mount point Available Required Comment

---------------- ------------ ------------ ------------ ------------ ------------

C:\Windows\temp P-HQ-CL-OR-12 C 36.2099GB 1GB passed

Result: Free disk space check passed for "P-HQ-CL-OR-12:C:\Windows\temp"

Check: Free disk space for "P-HQ-CL-OR-11:C:\Windows\temp"

Path Node Name Mount point Available Required Comment

---------------- ------------ ------------ ------------ ------------ ------------

C:\Windows\temp P-HQ-CL-OR-11 C 42.631GB 1GB passed

Result: Free disk space check passed for "P-HQ-CL-OR-11:C:\Windows\temp"

Check: System architecture

Node Name Available Required Comment

------------ ------------------------ ------------------------ ----------

P-HQ-CL-OR-12 64-bit 64-bit passed

P-HQ-CL-OR-11 64-bit 64-bit passed

Result: System architecture check passed

Checking length of value of environment variable "PATH"

Check: Length of value of environment variable "PATH"

Node Name Set? Maximum Length Actual Length Comment

---------------- ------------ ------------ ------------ ----------------

P-HQ-CL-OR-12 yes 1023 336 passed

P-HQ-CL-OR-11 yes 1023 345 passed

Result: Check for length of value of environment variable "PATH" passed.

Checking for Media Sensing status of TCP/IP

Node Name Enabled? Comment

------------ ------------------------ ------------------------

P-HQ-CL-OR-12 no passed

P-HQ-CL-OR-11 no passed

Result: Media Sensing status of TCP/IP check passed

Starting Clock synchronization checks using Network Time Protocol(NTP)...

Checking daemon liveness...

Check: Liveness for "W32Time"

Node Name Running?

------------------------------------ ------------------------

P-HQ-CL-OR-12 yes

P-HQ-CL-OR-11 yes

Result: Liveness check passed for "W32Time"

Check for NTP daemon or service alive passed on all nodes

Result: Clock synchronization check using Network Time Protocol(NTP) passed

Checking if current user is a domain user...

Check: If user "Inam" is a domain user

Result: User "Inam" is a part of the domain "DOMAIN_NAME"

Check: Time zone consistency

Result: Time zone consistency check passed

Checking for status of Automount feature

Node Name Enabled? Comment

------------ ------------------------ ------------------------

P-HQ-CL-OR-12 yes passed

P-HQ-CL-OR-11 yes passed

Result: Check for status of Automount feature passed

Pre-check for cluster services setup was successful.

Note: If any errors are encountered, these issues should be investigated and resolved before proceeding with the installation.
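Since both CLUVFY runs above redirect their verbose output to files, one quick way to spot problems is to search those files for tell-tale words. This is only a rough sketch using the standard Windows findstr command and the two file names used in this post; the choice of search words is an assumption, and a clean search result does not replace reading the report.

```bat
rem Search both CLUVFY reports, ignoring case, and print matching lines with line numbers
findstr /i /n "failed error warning" c:\temp\networkStorageVerification.txt c:\temp\precrsinst8.txt
```

With several space-separated words in the quoted string, findstr reports lines containing any one of them.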
